Dr. James Enns is a Distinguished University Scholar in the Department of Psychology at UBC. His core areas of expertise include Cognitive Science and Developmental Psychology. In this Q and A, Dr. Enns shares the research taking place in his lab, as well as what the lab does for fun.

What’s the lab’s main research focus?

We’re called the UBC Vision Lab, but attention is really our main thing, and that’s sort of morphed over time. Fifteen or twenty years ago, we would have been studying perception of people doing things like button presses in response to visual search displays on the computer. But these days, we’re more likely to watch someone move with their whole body in response to maybe a big-screen display – so it’s much more real-world kinds of interactions.

We also study collaborative cognition. In real life, you’re often sitting in front of a screen with someone, working on a website or some data, collaboratively doing a task. Things we used to study with individuals, we study now in dyads.

The stimuli have also changed, so instead of showing people geometric shapes and things, we’re often showing them videos of other people. We’re studying the social perception of other people’s actions.

How did you become interested in this line of research?

Early on, as an undergraduate, I thought visual perception was the most exciting field. I had good mentors in that area, which made it easy to get really excited about it. I was fascinated by visual illusions.

Can you tell us about any exciting recent findings?

One paper that we have under review right now that my senior graduate student is in charge of is about the perception of other people’s actions. So for instance, if I was making a sales pitch to you, and you reached for your phone, it would be critical for me to know whether you’re essentially getting bored with what I’m saying and you wanted to check your email, or whether it vibrated or blinked. We’re measuring the observer’s (my) perception of the actor (you). So if the actor is driven by the external world – in other words, if the phone vibrated – that’s going to really change my perception of you, versus if you were internally motivated and reached for your phone to check your email.

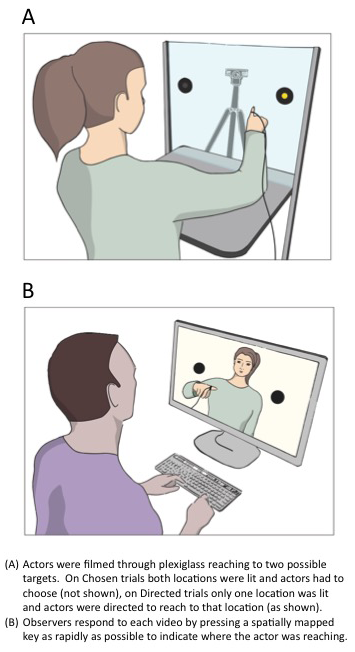

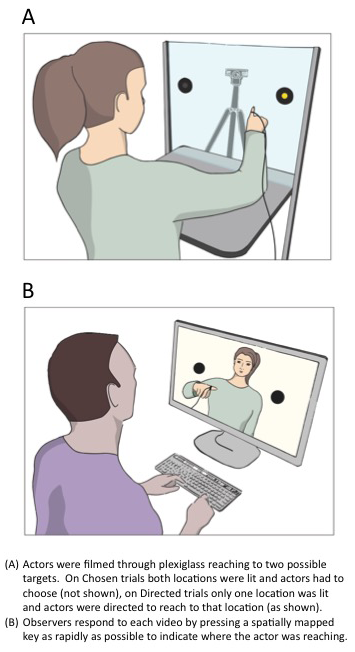

We mocked up a little game where recruited participants are responding by just taking their finger from a “home” position and reaching from one button to another on a plexiglass screen. They’re being videotaped. The key difference is that sometimes, they have to choose which button to touch. In other trials, they’re told which one to touch. So the observers (also recruited participants) are shown the videos later, and don’t know whether the actor is choosing or not. But as it turns out, the observers can actually tell whether the actors are choosing or not.

So we think we’ve got evidence for people being able to read other people’s minds through visual means. Nothing magical though, they’re just reading the kinematics.

The other really cool thing about that study is that if we measure people’s subclinical autistic tendencies, people that are more socially-oriented get a much bigger signal than people on the lower end of the scale. That really gives us confidence that we’re studying social perception. That’s because we can measure empathy with a pencil-and-paper test and predict your kinematic response to somebody. That’s a really cool result.

What type of new technologies are used in the lab?

For this research, you need a high-speed video camera and the ability to measure someone’s limbs in 3D when they’re in flight – in other words, you need some really expensive equipment. So how are we able to afford it? Well, about six or seven years ago, together with Alan Kingstone, we got a CFI grant for about $750,000 to buy all these toys. This allows our grad students to do things that no one else can do. They’ve got limb tracking, eye tracking, video and big screen displays…so we feel really fortunate. It was a really generous grant that definitely made things possible here that weren’t there before.

Tell us about the Vision Lab team.

I work with a postdoctoral fellow, Rob Whitwell, who studies how vision tells our muscles what to do when we reach out to manipulate objects. Rob’s research looks at the difference between two identified visual systems and how they talk to one another. The dorsal “action” system, which Rob says is “doing its thing in the background, unconsciously,” allows us to press elevator buttons without having to think about how far away the button is from our hand or in what direction. Then there’s the other system, the ventral “conscious perception” part. The conscious system is there to help us think about the world, recognize bits of it, and make decisions and form judgments about those bits. That system uses shortcuts to do these sorts of things, which is why visual illusions work. When we act on the world, however, shortcuts can be too risky – we might miss the target or fumble it – which is why our actions are less affected by illusions. Rob studies these systems in healthy individuals but also in patients that have damage to one system or the other. Rob has patients who are legally blind, they walk around with a white cane…but if you throw them a ball, they catch it. So that’s that action system at work.

This idea explains a lot of neuropsychological conditions that people suffer after brain damage.

Ana Pesquita, the Ph.D. student at the helm of the aforementioned mind-reading study, has also done really interesting work on how jazz musicians collaborate, and whether or not you can tell when you listen to jazz music whether the musicians are collaborating – the perception of social collaboration.

Two of the Vision Lab’s students just finished their Master’s theses this summer and are transferring to the Ph.D. level. One of those students, Stefan Bourrier, studies how urban and natural environments affect people’s working memory. The other one, Pavel Kozik, is a student in both Cognitive and Health Psychology. He studies visual art, but with the particular goal of helping scientists put up big data displays in ways that are engaging and useful to the users.

Imagine your standard weather map painted like Van Gogh. His thesis shows that these types of images are more memorable, and that people can extract information from them better than from the sort of tired old weather maps you’re used to seeing.

If you came here a month later, there’d also be ten or fifteen undergrads doing various projects. They come from [Psychology] 366, Honours, all the usual areas.

What does the lab do for fun?

Going for beers on Fridays. It’s a tradition.

View the Lab’s latest publications:

- 164. Chapman, C.S., Gallivan, J.P., Wong, J.D., Wispinski, N.J., & Enns, J.T. (in press). The snooze of lose: Rapid reaching reveals that losses are processed more slowly than gains. Journal of Experimental Psychology: General.

- 163. Visser, T.A.W., Ohan, J.L., & Enns, J.T. (in press). Temporal cues derived from statistical patterns can overcome resource limitations in the attentional blink. Attention, Perception, & Psychophysics.

- 162. Chapman, C.S., Gallivan, J.P., & Enns, J.T. (advance online pub 2014). Separating value from selection frequency in rapid reaching biases to visual targets. Visual Cognition.

- 161. Brennan, A.A., & Enns, J.T. (advance online pub 22 Nov 2014). When two heads are better than one: Interactive versus independent benefits of collaborative cognition. Psychonomic Bulletin and Review.

- 160. Ristic, J., & Enns, J.T. (2015). The changing face of attentional development. Current Directions in Psychological Science, 24(1), 24-31.

- 159. Visser, T.A., Tang, M.F., Badcock, D.R., & Enns, J.T. (2014). Temporal cues and the attentional blink: A further examination of the role of expectancy in sequential object perception. Attention, Perception, & Psychophysics, 76(8), 2212-2220.

- 158. Pesquita, A., Corlis, C., & Enns, J.T. (2014). Perception of musical cooperation in jazz duets is predicted by social aptitude. Psychomusicology: Music, Mind, and Brain, 24(2), 173-183.


-Katie Coopersmith

Lab of the Month is a monthly feature that highlights the research, goals, and people of UBC Psychology’s scientific laboratories.
